Lazy loading isn't just lazy, it's late: the web deserves faster.

A manifesto for proactive loading in Angular and other SPAs, and why lazy loading isn't just lazy: it's late. The web deserves faster.

Since time immemorial, web developers have been guided to focus on minimising bandwidth consumption. More recently, the guidance has been to load the content users can see as early as possible and, by definition, not to load content they can't see. This is colloquially known as lazy loading.

But it's just that: lazy. We tend to fetch more content only as the user scrolls it into view or navigates to the next page, which isn't just lazy: it's often late, and an awful user experience.

Lazy loading isn't just lazy: it's often late.

It's fair to suggest lazy loading is primarily designed to ensure your initial site load is fast, and that not a morsel of bandwidth or compute is wasted on anything that could put your Core Web Vitals into the amber or - heaven forbid - the red. That's perfectly reasonable and eminently sensible.

But what comes next? What happens when a user moves to the next route? Core Web Vitals can't measure soft navigations (for now), and ergo we don't talk about what comes next nearly enough.

But let's come back to Core Web Vitals later. How did we get here?

A short history of bandwidth consumption

We've come a long way since 14.4k baud modems (my personal first), when BBSs and ASCII art ruled. And if you think about it, all our media consumption is driven, to some extent, by the delivery medium of the day.

BBSs were necessarily text-based. Downloading music (not streaming, I hasten to add) only became practical when the MP3 format came into being. Netflix started life as a postal service. And here we are today, where downloading games like Flight Simulator 2020 - a whopping 100GB+ - or streaming 4K movies is considered normal.

Juxtapose streaming a 4K movie with modern user-centric web guidance, and web apps look remarkably parsimonious in comparison.

Did you know you can download the entirety of Wikipedia in around 19GB, but your typical 90-minute 4K movie runs to around 21GB? Fact is, you could download many entire websites in a few minutes. Maybe less.

Boiled Frog Syndrome

You've doubtless heard of Boiled Frog Syndrome? It's a metaphor for the idea that if change is gradual enough you'll fail to react until it's too late.

I occasionally bump into web developers who think there's still a limit of six concurrent connections per domain, a constraint HTTP/2's multiplexing largely did away with. Last week, when talking to content editors about low-resolution images, it turned out they didn't know about the Device Pixel Ratio. Not that surprising. But how many of us realise how much crisper images can look on a modern mobile device?

Maybe your experience is different, but my hypothesis is that many in the web ecosystem are still optimising like it's 2015 and haven't noticed the explosion in available bandwidth.

Little wonder native applications are considered a premium experience and progressive web apps (PWAs) remain somewhat in the doldrums.

The problem with Core Web Vitals and SPAs

It's right and proper that users' initial experience is a good one. But with a single-page application (SPA) there is no good, universal way to measure what happens next, and so that experience has been sorely neglected.

Yes, there's now Interaction to Next Paint (INP), but that's about ensuring the web app responds in some way to the user's tap or click: if you visually respond with something - anything - you pass. If it then takes another 10 seconds to complete the soft navigation, that's simply not captured as of today.
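Because INP doesn't capture it, you have to time soft navigations yourself. The following is a minimal sketch. It assumes you can hook the user's tap, for example a click handler or Angular's `NavigationStart` router event. It also assumes you can hook the moment the destination has actually rendered its content, which is not merely when routing finishes. `SoftNavTimer` is a hypothetical helper, not a browser or Angular API. The 200ms "feels instant" budget is the figure this article uses for an instant navigation.

```typescript
// Hypothetical helper for timing soft navigations end to end. It is not a
// browser or Angular API; it only relies on a monotonic clock.
interface SoftNavResult {
  route: string;
  durationMs: number;
  feelsInstant: boolean;
}

// Budget for a navigation to "feel instant", as discussed in the article.
const INSTANT_BUDGET_MS = 200;

class SoftNavTimer {
  private readonly starts = new Map<string, number>();
  private readonly now: () => number;
  readonly results: SoftNavResult[] = [];

  // The clock is injectable so the timer can be tested without real delays.
  constructor(now: () => number = () => performance.now()) {
    this.now = now;
  }

  // Call when the user taps a link.
  start(route: string): void {
    this.starts.set(route, this.now());
  }

  // Call once the destination's content has rendered, not merely when the
  // router reports that navigation has finished.
  end(route: string): SoftNavResult | undefined {
    const startedAt = this.starts.get(route);
    if (startedAt === undefined) return undefined; // no matching start()
    this.starts.delete(route);
    const durationMs = this.now() - startedAt;
    const result = { route, durationMs, feelsInstant: durationMs < INSTANT_BUDGET_MS };
    this.results.push(result);
    return result;
  }
}
```

The collected `results` could then be reported to your analytics alongside Core Web Vitals. That gives you a number for "what happens next" that INP alone won't.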

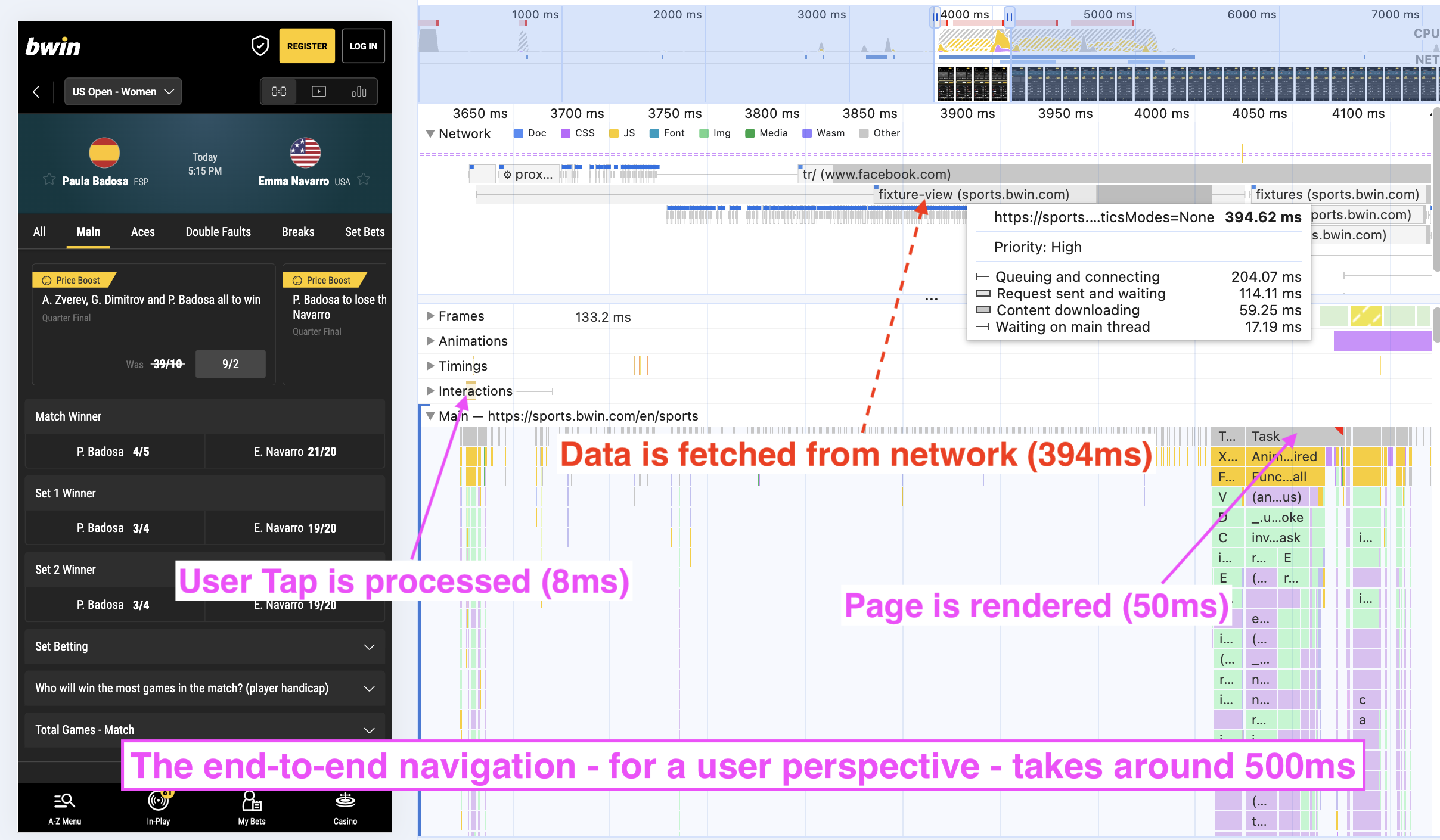

Over a long journey optimising the Angular SPA I work on, we've followed almost every last piece of best practice, including but not limited to: preloading; lazy loading; async loading; code splitting; bundle rollups; getting rid of extraneous change detection cycles; edge caching; other types of caching; stale-while-revalidate; algorithm optimisation; image optimisation; compression; minification; moving tracking and third parties to a web worker via Partytown; and countless other micro-optimisations.

Yet those soft navigations still weren't fast enough. When you tapped, there was a perceptible delay before you reached your destination.

Why? Because in our app - and, I suspect, many others - we still needed to collect the data required to show the next page, and that meant a round trip to the content delivery network (CDN) or even the origin. And with lazy-loaded routes, the first time you show a new route you probably need to download, parse, and compile some JavaScript. Only then would you know what data you needed; then you'd have to fetch it, and only then could you render it.

Buffering

Everyone knows what buffering is these days. Why? Because when it doesn't work and you're streaming the latest episode of Rick & Morty, you get an intensely frustrating spinning wheel. Worse still, there's no real way of knowing when it'll disappear so you can carry on watching.

But that's the default experience of the web. There is no buffering per se: spinning wheels and skeleton loading screens run amok. In many apps, what's needed next is only retrieved after you tap. That's simply too late.

Put it all together and that navigation is unlikely to complete end to end quickly enough to feel instant (<200ms).

Think about it: strip everything else away, and the typical out-of-the-box framework would have the following occur:

- User taps on a new route.

- A JavaScript module needs to be downloaded, parsed, and compiled.

- The data for the route needs to be fetched.

- ...

Doesn't that already sound wrong? Surely we could have found an idle moment earlier to do this without hurting the user's experience.

Instead, it could have been proactive. After all, that's what requestIdleCallback is for! Remember, we haven't even got the content yet, let alone rendered it. Lazy or late, whichever way you cut it, we're doing and thinking about this in one dimension: initial loading time.
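Here is a minimal sketch of that idea. Proactive work is queued, and the queue is drained only when the browser reports idle time via `requestIdleCallback`. The `timeout` option makes the browser run the work eventually even if it never goes idle. `IdleQueue`, `requestIdle` and the 2-second timeout are assumptions for illustration. `requestIdleCallback` isn't available in every environment (Node, for instance, lacks it), so the sketch falls back to a crude `setTimeout` shim.

```typescript
// Minimal shape of the IdleDeadline passed to requestIdleCallback callbacks.
interface IdleDeadlineLike {
  readonly didTimeout: boolean;
  timeRemaining(): number;
}

type IdleCallback = (deadline: IdleDeadlineLike) => void;

// Use the real requestIdleCallback where it exists; otherwise fall back to a
// setTimeout shim that pretends to offer a ~50ms idle period.
function requestIdle(callback: IdleCallback, timeoutMs: number): void {
  const ric = (globalThis as any).requestIdleCallback;
  if (typeof ric === "function") {
    ric.call(globalThis, callback, { timeout: timeoutMs });
    return;
  }
  setTimeout(() => {
    const start = Date.now();
    callback({ didTimeout: false, timeRemaining: () => Math.max(0, 50 - (Date.now() - start)) });
  }, 1);
}

// Hypothetical queue for proactive work (e.g. importing a route's module or
// prefetching its data) that only runs when the main thread is idle.
class IdleQueue {
  private readonly tasks: Array<() => void> = [];
  private scheduled = false;
  private readonly timeoutMs: number;

  constructor(timeoutMs = 2000) {
    this.timeoutMs = timeoutMs;
  }

  push(task: () => void): void {
    this.tasks.push(task);
    this.schedule();
  }

  private schedule(): void {
    if (this.scheduled || this.tasks.length === 0) return;
    this.scheduled = true;
    requestIdle((deadline) => this.drain(deadline), this.timeoutMs);
  }

  private drain(deadline: IdleDeadlineLike): void {
    this.scheduled = false;
    // Always run at least one task so work still progresses after a timeout,
    // then keep going only while the browser says idle time remains.
    do {
      const task = this.tasks.shift();
      task?.();
    } while (this.tasks.length > 0 && deadline.timeRemaining() > 0);
    this.schedule(); // anything left waits for the next idle period
  }
}
```

Each task should be small. The queue only checks `timeRemaining()` between tasks, so one long task can still block the main thread.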

Could it be we've gone too far with lazy loading and we need to compensate for what we removed from the initial load by proactively loading or buffering what comes next?

Prior Art

Solutions exist in the form of ngx-quicklink for Angular: it automatically downloads the lazy-loaded modules associated with all the links visible on screen. But it's fiddly, relying on routes being specified in a certain way, and it ignores the fact that there's usually data that also needs to be fetched.

There are libraries like Guess.js that use analytics and machine learning to predict where your users are likely to go next and tell you what to prefetch. But then, how do you actually prefetch it?

The Speculation Rules API, while experimental, isn't for SPAs: it's intended only for multi-page applications (MPAs). The problem is clearly understood, but solutions are hard to come by.

We need to fetch that data proactively, and to the best of my knowledge there's no widely discussed, out-of-the-box way to do that. Yet even a single round trip, even on a good connection, risks that soft navigation not feeling particularly instant.

Maybe I've missed something, but my gut feeling is that it's way too hard to give users an instant navigation experience, to the detriment of much of the web.

A possible solution for Angular and other SPAs

To proactively fetch that data, two things need to be true:

- We need to know the URLs for the data needed to render those routes.

- There needs to be some caching mechanism available such that the data is readily available when it's time to render it.
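Those two requirements can be sketched as a small cache keyed by data URL. The proactive path starts the request, and the render path later picks up the same promise instead of issuing a second one. This is an illustrative assumption only. The approach in this article leans on the browser's regular HTTP cache, so treat this in-memory version as an alternative. `PrefetchCache` and the injected `fetcher` are hypothetical names.

```typescript
// Hypothetical in-memory prefetch cache keyed by data URL. The fetcher is
// injected so any transport (fetch, HttpClient, a test stub) can be used.
type Fetcher<T> = (url: string) => Promise<T>;

class PrefetchCache<T> {
  private readonly entries = new Map<string, Promise<T>>();
  private readonly fetcher: Fetcher<T>;

  constructor(fetcher: Fetcher<T>) {
    this.fetcher = fetcher;
  }

  // Start fetching ahead of time; repeat calls reuse the in-flight request.
  prefetch(url: string): Promise<T> {
    const existing = this.entries.get(url);
    if (existing) return existing;
    const pending = this.fetcher(url);
    this.entries.set(url, pending);
    // Forget failures so a later prefetch() or get() can retry.
    pending.catch(() => {
      if (this.entries.get(url) === pending) this.entries.delete(url);
    });
    return pending;
  }

  // At render time, use the buffered data, fetching now only if it was never prefetched.
  get(url: string): Promise<T> {
    return this.prefetch(url);
  }
}
```

Entries never expire in this sketch. A real version would need some expiry policy, which is the job stale-while-revalidate does for the HTTP cache.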

Proactive soft navigations

Angular has the concept of route resolvers. They're primarily designed to ensure all the data required to render a page is available before rendering begins, so you can avoid things like Cumulative Layout Shift (CLS).

So close. I mean, really close. Effectively, a route resolver does exactly what we need: it figures out what data is needed and fetches it before rendering begins.

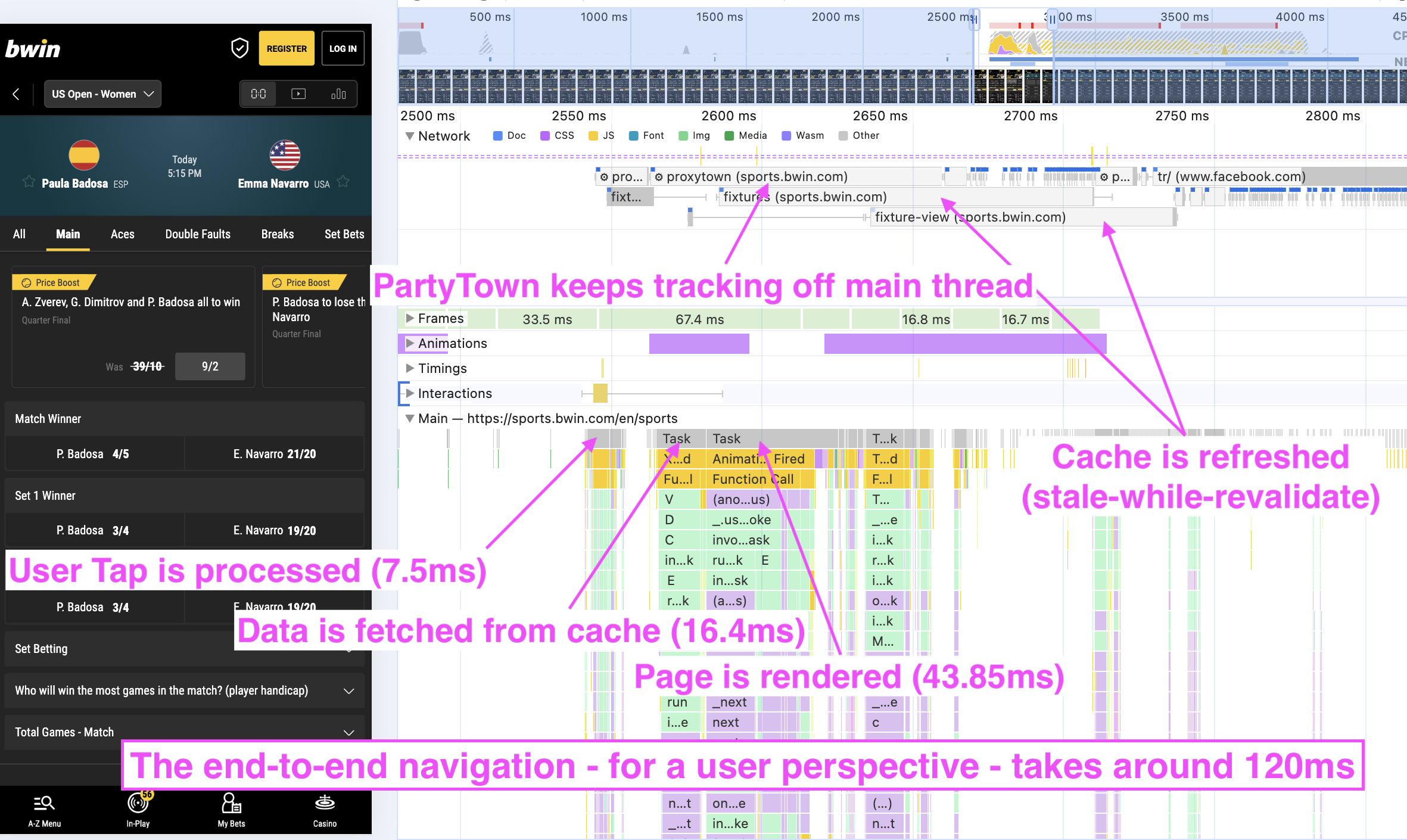

This is where we introduced proactive soft navigations in our app: we figured out how to run the route resolvers for links in the viewport using an IntersectionObserver. There's no rendering, or anything else that would consume much CPU or really even block the main thread.
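Below is a framework-agnostic sketch of the idea, not the Angular implementation described here. An `IntersectionObserver` watches in-app links. When one scrolls into view, a resolver-like function is asked which data URLs that route needs, and each is prefetched at most once. `ResolveDataUrls`, `makeLinkHandler` and `observeLinks` are hypothetical names. In Angular the URLs would come from the route's resolver rather than a hand-written function.

```typescript
// What the handler needs to know about each observed link.
interface LinkVisibility {
  isIntersecting: boolean;
  path: string; // e.g. "/event/42"
}

// Hypothetical stand-in for a route resolver: which data URLs does this path
// need? Returns undefined for paths it doesn't know how to resolve.
type ResolveDataUrls = (path: string) => string[] | undefined;

// Pure part: prefetch each visible route's data at most once.
function makeLinkHandler(
  resolveDataUrls: ResolveDataUrls,
  prefetch: (url: string) => void,
): (links: LinkVisibility[]) => void {
  const handled = new Set<string>();
  return (links) => {
    for (const { isIntersecting, path } of links) {
      if (!isIntersecting || handled.has(path)) continue;
      const urls = resolveDataUrls(path);
      if (urls === undefined) continue;
      handled.add(path);
      for (const url of urls) prefetch(url);
    }
  };
}

// Browser wiring: observe in-app links and report visibility changes.
function observeLinks(
  root: ParentNode,
  onLinks: (links: LinkVisibility[]) => void,
): IntersectionObserver {
  const observer = new IntersectionObserver((entries) => {
    onLinks(
      entries.map((entry) => ({
        isIntersecting: entry.isIntersecting,
        path: new URL((entry.target as HTMLAnchorElement).href).pathname,
      })),
    );
  });
  // Root-relative links (but not protocol-relative "//host" ones) are treated as in-app routes.
  root
    .querySelectorAll<HTMLAnchorElement>('a[href^="/"]:not([href^="//"])')
    .forEach((a) => observer.observe(a));
  return observer;
}
```

In practice you'd probably defer the `prefetch` calls to an idle callback. You'd also skip them entirely for users who have Save Data enabled.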

We had a ready-made caching mechanism in the form of the browser's regular HTTP cache; the web is really good at that. Using a CDN meant round-trip times were minimised and our origin servers weren't hurt by the inevitable uptick in requests as we fetched data that, frankly, may never be used.

Throw in stale-while-revalidate and there's even a way to keep the cache fresh asynchronously: the cached copy is served immediately while it's revalidated in the background.
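For reference, stale-while-revalidate is an HTTP `Cache-Control` extension defined in RFC 5861. Within `max-age` a cached response is fresh. For a further `stale-while-revalidate` seconds it may be served stale while the cache revalidates it in the background. After that it must be refetched. The sketch below only models those rules. The header values are illustrative, not the ones used in the app described here. CDNs may also honour `s-maxage` or their own directives, which this ignores.

```typescript
// Which of the three stale-while-revalidate phases a cached response is in.
type Freshness = "fresh" | "stale-while-revalidate" | "expired";

interface CachePolicy {
  maxAge: number; // seconds the response is fresh
  staleWhileRevalidate: number; // extra seconds it may be served while revalidating
}

// Read max-age and stale-while-revalidate from a Cache-Control header
// (missing directives count as 0). s-maxage is deliberately not matched.
function parseCacheControl(header: string): CachePolicy {
  const seconds = (directive: string): number => {
    const match = header.match(new RegExp(`(?:^|,)\\s*${directive}=(\\d+)`, "i"));
    return match ? Number(match[1]) : 0;
  };
  return { maxAge: seconds("max-age"), staleWhileRevalidate: seconds("stale-while-revalidate") };
}

// Classify a cached response by its age in seconds.
function freshness(ageSeconds: number, policy: CachePolicy): Freshness {
  if (ageSeconds < policy.maxAge) return "fresh";
  if (ageSeconds < policy.maxAge + policy.staleWhileRevalidate) return "stale-while-revalidate";
  return "expired";
}
```

Consider a response sent with `Cache-Control: public, max-age=60, stale-while-revalidate=300`. For its first minute it is served straight from cache. For the next five minutes it is still served instantly but refreshed in the background. After that it is refetched.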

Look at that timeline now:

- A JavaScript module is downloaded, parsed, and compiled during an idle moment while a link to the route that uses it is in the viewport.

- We figure out what data the route needs and proactively fetch it (effectively buffering it for later).

- The user taps on a new route.

- We render it.

Doesn't that feel better? When the user taps, we now have everything required up front, and rendering can typically be done quickly enough that it feels instant. Even on a middle-of-the-road Android device on an average connection, it works.
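The reordered timeline above can be sketched without any framework. The route's module loader and data loader are memoised, so the idle-time "buffer" step and the later tap share the same promises. In a real app `loadModule` would be something like a dynamic `import()` of the route's code. Browsers already reuse a module once `import()` has loaded it, but memoising both loaders keeps the two steps symmetrical. The loaders and the `once`, `proactiveRoute`, `bufferRoute` and `navigate` helpers are all hypothetical.

```typescript
type Loader<T> = () => Promise<T>;

// Memoise a loader so every caller shares one promise; a failure is
// forgotten so the next caller can retry.
function once<T>(loader: Loader<T>): Loader<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (pending) return pending;
    const attempt = loader();
    pending = attempt;
    attempt.catch(() => {
      if (pending === attempt) pending = undefined;
    });
    return attempt;
  };
}

interface ProactiveRoute<M, D> {
  module: Loader<M>; // download, parse and compile the route's code
  data: Loader<D>; // fetch what the route's resolver needs
}

function proactiveRoute<M, D>(loadModule: Loader<M>, loadData: Loader<D>): ProactiveRoute<M, D> {
  return { module: once(loadModule), data: once(loadData) };
}

// Steps 1 and 2: during an idle moment, while a link to the route is visible.
// Errors are swallowed here; navigate() retries and surfaces them.
function bufferRoute<M, D>(route: ProactiveRoute<M, D>): void {
  route.module().catch(() => undefined);
  route.data().catch(() => undefined);
}

// Steps 3 and 4: on tap, the promises are usually already settled, so we go
// straight to rendering.
async function navigate<M, D>(
  route: ProactiveRoute<M, D>,
  render: (mod: M, data: D) => void,
): Promise<void> {
  const [routeModule, data] = await Promise.all([route.module(), route.data()]);
  render(routeModule, data);
}
```

If the user taps before buffering has finished, `navigate` simply waits on the in-flight promises. That is still earlier than starting both requests at tap time.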

Result: our navigations are now ~75% faster

Do you want to see what it looks like? Depending on your jurisdiction, and assuming you're over 21 years of age, visit the Sportsbooks at BetMGM, bwin, or Sportingbet. Or just enjoy these flame graphs instead.

IntersectionObserver triggering the Angular route resolver

Time to Navigate: Before

Time to Navigate: After

What's left to do in our implementation at the time of writing? Page visibility: how should we refresh content when the user returns to the tab or brings the application back to the foreground?
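Here is one possible approach, offered only as an assumption and not something the implementation described here does. Listen for `visibilitychange` and note when the page was hidden. If it has been away longer than some threshold when it becomes visible again, re-run the proactive fetching. `VisibilityRefresher`, `watchVisibility`, the `refresh` callback and the 60-second threshold are all hypothetical.

```typescript
// Hypothetical helper: decide whether buffered data should be refreshed when a
// hidden tab becomes visible again. The clock is injectable for testing.
class VisibilityRefresher {
  private hiddenAt: number | undefined;
  private readonly refresh: () => void;
  private readonly maxHiddenMs: number;
  private readonly now: () => number;

  constructor(refresh: () => void, maxHiddenMs = 60_000, now: () => number = () => Date.now()) {
    this.refresh = refresh;
    this.maxHiddenMs = maxHiddenMs;
    this.now = now;
  }

  // Feed this document.visibilityState ("visible" or "hidden").
  onVisibilityChange(state: string): void {
    if (state === "hidden") {
      this.hiddenAt = this.now();
      return;
    }
    if (state === "visible" && this.hiddenAt !== undefined) {
      const awayMs = this.now() - this.hiddenAt;
      this.hiddenAt = undefined;
      // e.g. re-run the resolvers for links currently in the viewport
      if (awayMs >= this.maxHiddenMs) this.refresh();
    }
  }
}

// Browser wiring.
function watchVisibility(refresher: VisibilityRefresher): void {
  document.addEventListener("visibilitychange", () =>
    refresher.onVisibilityChange(document.visibilityState),
  );
}
```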

Edit: of course, one needs to be mindful of users who have Save Data enabled. The above isn't right for everyone, especially those who have explicitly asked to save data: not everyone has unlimited bandwidth.
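Respecting that preference can be a simple guard before any proactive work. Chromium-based browsers expose the user's Save Data preference as `navigator.connection.saveData` and send a `Save-Data: on` request header. `navigator.connection` is part of the Network Information API and is not available in every browser. The sketch below checks the flag. Also skipping prefetching on `2g` and `slow-2g` connections (`effectiveType`) is an additional assumption, not something the article prescribes. `shouldPrefetch` and `currentConnection` are hypothetical names.

```typescript
// The subset of the Network Information API's NetworkInformation we read.
interface ConnectionInfo {
  saveData?: boolean;
  effectiveType?: string; // "slow-2g" | "2g" | "3g" | "4g" where supported
}

// Decide whether proactive prefetching is appropriate for this user.
function shouldPrefetch(connection: ConnectionInfo | undefined): boolean {
  if (connection === undefined) return true; // API unsupported: no signal either way
  if (connection.saveData) return false; // the user explicitly asked to save data
  // Assumption: very slow connections are also better left alone.
  return connection.effectiveType !== "slow-2g" && connection.effectiveType !== "2g";
}

// In the browser, navigator.connection is undefined where the API isn't supported.
function currentConnection(): ConnectionInfo | undefined {
  return (globalThis as any).navigator?.connection;
}
```

Usage would be `if (shouldPrefetch(currentConnection())) { /* start proactive work */ }`. Treating an unsupported API as "go ahead" is a design choice. A more conservative app could default to `false` instead.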

Epilogue

I might be wrong, or maybe I've missed something, but either way this topic is so rarely discussed that it drove me to write about it. Maybe other component libraries or frameworks have a ready-made, out-of-the-box way to do this; answers on a postcard if so.

As I hinted earlier, I predict that once Core Web Vitals, browsers, and frameworks agree on a universal way to measure soft navigations, there will be a renaissance in how we handle them.

Hopefully this time around the answer might not be to only minify, reduce, remove, split, and otherwise be lazy. Maybe the answer will also involve being more proactive to give a better user experience, not to mention an easier developer experience.

Huge thanks to Push Based, and Christopher Holder in particular, who worked on the implementation with me. If you ever need Angular expertise, don't hesitate to call them.